- Azure services can be configured to send their service logs to a specific Application Insights instance.

- Instrumentation packages can be added to services to capture logs from sources such as IIS or background services. You can also pull infrastructure telemetry into Application Insights, e.g. Docker logs and system events.

- Custom code can also call the Application Insights instance to add logging and hook into exception handling. There are SDKs for .NET, Node.js, Python and other platforms that should be used to add logging, exception capture, and performance and usage statistics.
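As a rough illustration of the custom-code path, the sketch below uses only Python's standard `logging` module. `InMemoryTelemetryHandler`, the `orders` logger name, and the record fields are hypothetical stand-ins for what a real Application Insights SDK would provide; nothing here is part of an Azure package, and records are kept in a list instead of being sent anywhere.

```python
import logging
import sys


class InMemoryTelemetryHandler(logging.Handler):
    """Hypothetical stand-in for an SDK exporter: keeps records in a list."""

    def __init__(self):
        super().__init__()
        self.items = []

    def emit(self, record):
        # Records that carry exception info are stored as "exception",
        # everything else as a plain "trace".
        item = {
            "kind": "exception" if record.exc_info else "trace",
            "severity": record.levelname,
            "message": record.getMessage(),
        }
        if record.exc_info:
            item["exception_type"] = record.exc_info[0].__name__
        self.items.append(item)


handler = InMemoryTelemetryHandler()
logger = logging.getLogger("orders")  # hypothetical logger name
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False


def report_unhandled(exc_type, exc_value, exc_tb):
    """Record unhandled exceptions, then defer to the default hook."""
    logger.error("unhandled exception", exc_info=(exc_type, exc_value, exc_tb))
    sys.__excepthook__(exc_type, exc_value, exc_tb)


sys.excepthook = report_unhandled

# Custom logging: an ordinary trace message.
logger.info("order %s accepted", 42)

# Exception capture: logger.exception attaches the active exception.
try:
    1 / 0
except ZeroDivisionError:
    logger.exception("failed to compute total")
```

With a real SDK, the same `logging` calls would stay in place and only the handler would change, which is the main reason to route custom telemetry through the standard logger.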

"What kinds of data are collected?

The main categories are:

- Web server telemetry - HTTP requests. Uri, time taken to process the request, response code, client IP address. Session id.

- Web pages - Page, user and session counts. Page load times. Exceptions. Ajax calls.

- Performance counters - Memory, CPU, IO, Network occupancy.

- Client and server context - OS, locale, device type, browser, screen resolution.

- Exceptions and crashes - stack dumps, build id, CPU type.

- Dependencies - calls to external services such as REST, SQL, AJAX. URI or connection string, duration, success, command.

- Availability tests - duration of test and steps, responses.

- Trace logs and custom telemetry - anything you code into your logs or telemetry."
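To make one of these categories concrete, here is a minimal sketch of what recording a dependency call could look like: target, command, duration and success. The `DependencyRecord` fields, the `track_dependency` helper, and the example targets are assumptions chosen for illustration; they do not reproduce the actual Application Insights schema or SDK API.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass


@dataclass
class DependencyRecord:
    """Illustrative shape only; field names are assumptions, not the SDK schema."""
    target: str
    command: str
    duration_ms: float
    success: bool


records = []


@contextmanager
def track_dependency(target, command):
    """Time a call to an external service and record whether it succeeded."""
    start = time.perf_counter()
    success = True
    try:
        yield
    except Exception:
        success = False
        raise
    finally:
        duration_ms = (time.perf_counter() - start) * 1000.0
        records.append(DependencyRecord(target, command, duration_ms, success))


# A successful call; the sleep stands in for a real HTTP or SQL request.
with track_dependency("https://example.invalid/api/orders", "GET /orders"):
    time.sleep(0.01)

# A failing call is still recorded, with success=False, before the error propagates.
try:
    with track_dependency("sql://example-db", "SELECT 1"):
        raise TimeoutError("simulated failure")
except TimeoutError:
    pass
```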

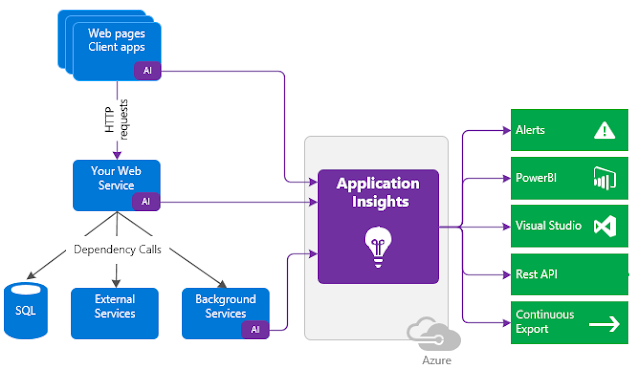

- Collect (blue boxes): Track infrastructure and PaaS services via instrumentation (throughput, speed, response times, failure rates, exceptions, etc.), and use an SDK (e.g. the JavaScript SDK or C#) to add custom logging and tracing.

- Store (purple box): Stores the collected data.

- Insights (green box): Alerts, Power BI, live metrics, and the REST API.
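The three stages can be sketched as a toy pipeline: records are collected, held in a store, and then evaluated by an alert rule. Everything below (`RequestRecord`, `TelemetryStore`, `check_alert`, the 10% threshold) is hypothetical and runs in memory; it only mirrors the flow in the diagram, not how Application Insights is implemented.

```python
from dataclasses import dataclass


@dataclass
class RequestRecord:
    url: str
    response_code: int
    duration_ms: float


class TelemetryStore:
    """Store stage: a plain list standing in for the telemetry store."""

    def __init__(self):
        self._requests = []

    def collect(self, record):
        """Collect stage: accept one record from instrumentation or an SDK."""
        self._requests.append(record)

    def failure_rate(self):
        """Fraction of stored requests that returned a 5xx response code."""
        if not self._requests:
            return 0.0
        failed = sum(1 for r in self._requests if r.response_code >= 500)
        return failed / len(self._requests)


def check_alert(store, threshold):
    """Insights stage: return an alert message when the failure rate is too high."""
    rate = store.failure_rate()
    if rate > threshold:
        return f"ALERT: failure rate {rate:.0%} exceeds {threshold:.0%}"
    return None


store = TelemetryStore()
for code in (200, 200, 500, 200):
    store.collect(RequestRecord("/orders", code, 12.0))

print(check_alert(store, threshold=0.10))
# prints "ALERT: failure rate 25% exceeds 10%"
```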