Overview: Microsoft provides a useful list that maps AWS services to their Azure equivalents. This is handy if you know one platform considerably better than the other and want to quickly figure out your options on either AWS or Azure.

My service comparison notes:

Amazon CloudWatch - same as Azure Monitor.

Amazon Relational Database Service (RDS) – SQL Server, Oracle, MySQL, PostgreSQL and Aurora (Amazon's proprietary database).

Azure SQL lines up with Amazon's RDS for SQL Server. Aurora is probably also worth comparing, as it is AWS's native database option. Aurora offers better performance and more scale than standard RDS engines, with a high availability SLA (99.99% for Multi-AZ clusters). Aurora Serverless competes directly with Azure SQL. Aurora is excellent, and AWS positions it as considerably faster than standard MySQL or PostgreSQL.

Amazon DynamoDB is the equivalent of Azure Cosmos DB; both are NoSQL databases.

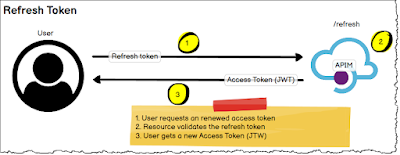

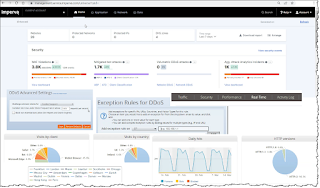

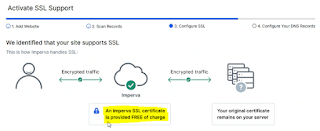

AWS API Gateway - Azure API Management

Amazon Redshift is the data warehouse service. It can be encrypted and network-isolated, and it supports petabytes of data.

Amazon ElastiCache runs Redis and Memcached (a simple cache).

AWS Lambda – Azure Functions (i.e. serverless).

AWS Elastic Beanstalk – Platform for deploying and scaling web apps and services. Same as Azure App Service.

Amazon SNS – Pub/Sub model – Azure Event Grid.

Amazon SQS – Message queue. Same as Azure Storage Queues and Azure Service Bus.

AWS Step Functions – Workflow. Same as Azure Logic Apps.

AWS Snowball – Same as Azure Data Box. Physically copy data onto the device and ship it to the data centre for upload.

Amazon Virtual Private Cloud (VPC) – Azure Virtual Network.

Amazon AppStream 2.0 - Azure Virtual Desktop (VDI).

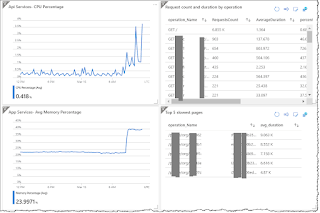

Amazon QuickSight - Power BI (business intelligence, similar in role to Tableau).

AWS CloudFormation - ARM templates and Bicep.

Tip: I am glad that I took the AWS Certified Cloud Practitioner exam, as it improved my understanding of the AWS offering, which has been very useful in large integration projects. Historically I have worked with AWS EC2, API Gateway and S3. Like Azure, AWS has a lot of services and features. Broadly, there are equivalent services on Azure and AWS, although sometimes the mapping is two-to-one, and occasionally a service has no equivalent on the other cloud.