The goal of Solution Architecture is to achieve a common understanding of how a technical solution will be reached. Diagrams are useful here: they provide a communicable roadmap and help track its completion. Later, the diagrams are used to ensure all relevant parties have a clear, unambiguous, shared understanding of the IT solution.

The main tools for communicating the architectural solution design are diagrams and documents that utilise common, previously used and understood patterns to ensure a safe, scalable, stable, performant, and maintainable solution. One example is the "4+1 view, which includes the scenario, logical, physical, process, and development views of the architecture" (source).

Below are patterns and thoughts I have encountered and used when building solutions.

3/N Tier Architecture/Layered:

1) Presentation/UI layer

2) Business Logic

3) Data Layer/Data source
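As a rough illustration of the three layers listed above, the sketch below keeps each tier behind its own boundary so the presentation code never touches storage directly. All names (`InMemoryUserStore`, `UserService`, `render_user`) are hypothetical, and a dictionary stands in for a real database.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class User:
    user_id: int
    name: str


class InMemoryUserStore:
    """Data layer: a dictionary stands in for a real database (assumption)."""

    def __init__(self) -> None:
        self._rows: Dict[int, User] = {}

    def save(self, user: User) -> None:
        self._rows[user.user_id] = user

    def find(self, user_id: int) -> Optional[User]:
        return self._rows.get(user_id)


class UserService:
    """Business logic layer: validation and rules live here, not in the UI."""

    def __init__(self, store: InMemoryUserStore) -> None:
        self._store = store

    def register(self, user_id: int, name: str) -> User:
        if not name.strip():
            raise ValueError("name must not be empty")
        user = User(user_id, name.strip())
        self._store.save(user)
        return user

    def get(self, user_id: int) -> Optional[User]:
        return self._store.find(user_id)


def render_user(service: UserService, user_id: int) -> str:
    """Presentation layer: formats output and only talks to the service."""
    user = service.get(user_id)
    return f"User {user.user_id}: {user.name}" if user else "User not found"
```

Swapping the store for a SQL-backed class would not change `render_user`, which is the main point of the layering.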

Here are some possible examples of stacks you could have used over the years:

ASP > C++ Com > SQL Server 2000

ASP.NET (Web Forms) > C# Web Service (XML/SOAP) > SQL Server 2008

ASP.NET C# > C# Business Object Layer > SQL Server 2008

KO > MVC > SQL 2012

Angular > C# Web API (Swagger contract) > SQL 2016

React.js > Node.js > Amazon Redshift

UI > Azure Functions/Serverless > SQL Azure

Flutter > C# Web API .NET Core 3 (swagger/OpenAPI) published on Azure App Service > SQL Azure/Cosmos

APIs: Over the years, I have seen many different APIs. At a high level, the progression has been:

- Proprietary formatted APIs >

- XML, with SOAP coming out of XML-based APIs >

- REST/JSON (other popular options are RAML and GraphQL) >

- Event-driven APIs, which may be the next big jump.

Thoughts: As time has progressed, scaling each of these layers has become easier. For instance, Azure SQL has replication and high availability, and scalability automatically built in. No need to think about load balancing in depth. Plug and play and ask for more if you need it.

Microsoft SQL Server used to be a single server; then came replication, clustering, and Always On availability groups, and scaling and performance greatly improved.

The middle tier or business layer used to follow a singleton pattern: all business logic went through a single server. Slowly, load balancing improved and caching became better. Nowadays, you merely ramp up on your cloud provider.

Sharded Architecture: An application is broken into many distinct units/shards. Each shard lives in total isolation from the other shards.

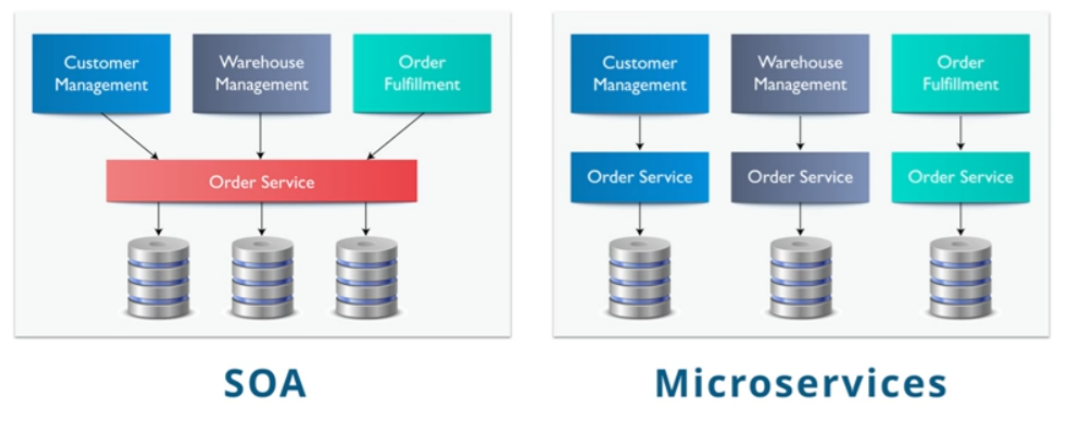

SOA or Microservice architectures often use this approach. "SOA is focused on application service reusability while Microservices are more focused on decoupling".

Source: https://kkimsangheon.github.io

The problems with tightly coupling multiple services are:

- Complexity - It is difficult to change code and know the effects. Also, services need to be deployed together to test changes.

- Resilience - If one service goes down, the whole suite goes down.

- Scalability - This can be an issue as the slowest component becomes the bottleneck.

For instance, build a complete application to handle ordering and a separate system that handles inventory. Both could be in different data stores; let's say orders are on Cosmos DB and inventory is on Azure SQL. Some of the inventory data is static in nature, so I decided to use app caching (Redis). Both data sources are based on independent serverless infrastructure, so if you see that inventory has an issue, merely scale it. The front-end store would seamlessly connect to both separately.

"Sharding" databases (horizontal partitioning) is a similar concept, but only at the database level. Sharding can be highly scalable, allows for leveraging and reusing existing services, and can be flexible as it grows. Watch out for distributed transactions (2 Phase Commit/2PC, Sagas).
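The caching of mostly static inventory data mentioned above is commonly done with a cache-aside approach. The following is a minimal sketch under stated assumptions: a plain dictionary stands in for Redis, and `CachedInventory` and its loader callback are hypothetical names, not part of any Azure or Redis API.

```python
from typing import Callable, Dict


class CachedInventory:
    """Cache-aside wrapper; a dict stands in for Redis (assumption)."""

    def __init__(self, load_from_db: Callable[[str], int]) -> None:
        self._load_from_db = load_from_db
        self._cache: Dict[str, int] = {}
        self.db_reads = 0  # counts trips to the underlying data store

    def stock_level(self, sku: str) -> int:
        if sku in self._cache:
            # Cache hit: no database round trip.
            return self._cache[sku]
        # Cache miss: read the source of truth, then remember the answer.
        self.db_reads += 1
        value = self._load_from_db(sku)
        self._cache[sku] = value
        return value

    def invalidate(self, sku: str) -> None:
        """Call after a write so the next read goes back to the database."""
        self._cache.pop(sku, None)
```

The trade-off is staleness: until `invalidate` is called (or, in a real cache, a time-to-live expires), readers keep seeing the cached value.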

Thoughts:

Pros:

- Developers and teams can work independently.

- Great for reusing existing services instead of creating them yourself, e.g. App Insights on Azure.

- Great for high availability and targeted scalability.

- Zero trust security: explicitly verify, use least-privilege access, and assume breach. Implement defence in depth. Monitor and analyse for threat protection.

- Focused costs by scaling the individual microservices.

Cons:

- Services need to be independent, or deployment becomes a challenge.

- Increased latency - you may need to go to various systems sequentially.

- Keys need to be managed, e.g., a clientId, for this decoupled architecture type. This architecture can also become complex, especially if you need to expand a shard to do something it doesn't do today.

- Data aggregation and ETL can become complex and have time delays.

- Referential integrity and guaranteed commits are an issue. Sagas or 2PC can improve this, but the result is not ACID.

- Rules for strict governance and communication between teams are needed.

- Monitoring and troubleshooting can be challenging. Build a traceable service (App Insights for finding and understanding issues; it needs to be polyfilled for long-running operations, and SPAs need unique correlationIds).
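To make the correlation ID idea from the last point concrete, here is a small sketch of how an ID might be minted once at the edge and then carried through every downstream call and log line. The header name `X-Correlation-Id` is a common convention assumed here, and both helper functions are hypothetical rather than part of App Insights.

```python
import uuid
from typing import Dict

# Header name is a widely used convention, assumed for this sketch.
CORRELATION_HEADER = "X-Correlation-Id"


def ensure_correlation_id(headers: Dict[str, str]) -> Dict[str, str]:
    """Reuse the caller's correlation ID, or mint one at the edge of the system."""
    outgoing = dict(headers)  # copy so the caller's headers are not mutated
    if not outgoing.get(CORRELATION_HEADER):
        outgoing[CORRELATION_HEADER] = str(uuid.uuid4())
    return outgoing


def log_line(service: str, headers: Dict[str, str], message: str) -> str:
    """Prefix every log entry with the ID so entries can be joined across services."""
    return f"[{headers[CORRELATION_HEADER]}] {service}: {message}"
```

Each service would call `ensure_correlation_id` on the incoming headers and pass the result along on outgoing requests, so a single search on the ID reconstructs the whole request path.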

Event-driven architecture: The client sends a request that includes a callback address for the server to contact when the event happens. So if the client is asking a server to perform a complex calculation, the client could keep polling a long-running operation until the server has the answer, or use an event-driven architecture: "Can you please calculate this, and when you are done, send the response to me at...". Types of event-driven architecture include WebHooks, WebSockets, ESB (pub-sub), and Server-Sent Events (SSE).

They are loosely coupled and run only when an event occurs. In Azure, they generally cover Functions, Logic Apps, Event Grid (event broker), and APIM. They are easy to connect using Power Platform Connectors.

- Client/Service sends a broadcast event.

- Consumers listen for events to see if they want to use the event.
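The broadcast-and-listen flow above can be sketched with a tiny in-memory broker. This is only an illustration of the publish/subscribe shape; `EventBroker` and the event names are hypothetical, and a real system would use something like Event Grid or Service Bus instead.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Handler = Callable[[Dict[str, Any]], None]


class EventBroker:
    """In-memory stand-in for an event broker (assumption for illustration)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        # Consumers register interest in one kind of event.
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: Dict[str, Any]) -> int:
        """Deliver to every interested consumer; returns how many were notified."""
        handlers = self._subscribers.get(event_type, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)
```

The publisher never learns who consumed the event, which is what keeps the two sides loosely coupled.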

Hexagonal Architecture - related to, and a forerunner of, Microservices.

Command Query Responsibility Segregation (CQRS) - a pattern in which the methods for querying data and for inserting/updating data are different and separated. This is a performance and scaling pattern.
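A minimal sketch of that separation follows, assuming a hypothetical order domain: the command handler owns validation and writes, while the read model holds a denormalised view shaped for one specific query. In a real system the two sides would usually sit on separate stores and be kept in sync asynchronously.

```python
from typing import Dict, Tuple


class OrderReadModel:
    """Query side: a denormalised view shaped for the question being asked."""

    def __init__(self) -> None:
        self._total_by_customer: Dict[str, int] = {}

    def apply_order_placed(self, customer: str, amount: int) -> None:
        current = self._total_by_customer.get(customer, 0)
        self._total_by_customer[customer] = current + amount

    def total_spent(self, customer: str) -> int:
        return self._total_by_customer.get(customer, 0)


class OrderCommandHandler:
    """Command side: validates and records changes, then updates the view."""

    def __init__(self, read_model: OrderReadModel) -> None:
        self._orders: Dict[int, Tuple[str, int]] = {}
        self._read_model = read_model

    def place_order(self, order_id: int, customer: str, amount: int) -> None:
        if amount <= 0:
            raise ValueError("amount must be positive")
        if order_id in self._orders:
            raise ValueError("duplicate order id")
        self._orders[order_id] = (customer, amount)
        # Synchronous projection for simplicity; often done via events instead.
        self._read_model.apply_order_placed(customer, amount)
```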

Domain-Driven Design (DDD) - Design software in line with business requirements. The code's structure and language must match the business domain. DDD Diagrams help create a shared understanding of the problem space/domain to aid in conversation and further learning within the team. "Bounded Context is a central pattern in Domain-Driven Design. It is the focus of DDD's strategic design section, which deals with large models and teams. DDD deals with large models by dividing them into different Bounded Contexts and being explicit about their interrelationships." Martin Fowler.

RACI Diagram - a visual diagram showing the functional role of each person on a team or service. Useful for seeing who is responsible for what part of a service or their role within a team.

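Event Sourcing Pattern - Used for event-based architecture. State changes are stored as an append-only sequence of events, and the current state is derived by replaying them.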
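As a hedged illustration of event sourcing, the sketch below never stores a balance; it appends events and rebuilds the balance by replaying them. The `Account` class and the event names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Event:
    kind: str    # "deposited" or "withdrawn" (hypothetical event names)
    amount: int


class Account:
    def __init__(self) -> None:
        self.events: List[Event] = []  # append-only log, the source of truth

    def deposit(self, amount: int) -> None:
        self.events.append(Event("deposited", amount))

    def withdraw(self, amount: int) -> None:
        if amount > self.balance():
            raise ValueError("insufficient funds")
        self.events.append(Event("withdrawn", amount))

    def balance(self) -> int:
        """State is not stored; it is rebuilt by replaying the log."""
        total = 0
        for event in self.events:
            total += event.amount if event.kind == "deposited" else -event.amount
        return total
```

Because the log is kept, the same events can later be replayed into a different view, which is why this pattern is often paired with CQRS.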

AMQP is a standard for passing business messages between systems. AMQP is the default protocol used in Azure Service Bus. Amazon MQ and RabbitMQ also support AMQP, making it one of the main standard protocols for event messaging.

Competing Consumer Pattern – Multiple consumers are ready to process messages from the queue.

Priority Queue pattern - Messages have a priority and are processed in order of priority.
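A priority queue can be sketched with Python's `heapq` module. This is an in-process illustration only; `PriorityMessageQueue` is a hypothetical name, and the convention that a lower number means higher priority is an assumption of this sketch.

```python
import heapq
import itertools
from typing import List, Optional, Tuple


class PriorityMessageQueue:
    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str]] = []
        # Tie-breaker that keeps first-in, first-out order within one priority.
        self._sequence = itertools.count()

    def send(self, message: str, priority: int) -> None:
        """Lower number means higher priority (an assumption of this sketch)."""
        heapq.heappush(self._heap, (priority, next(self._sequence), message))

    def receive(self) -> Optional[str]:
        """Return the most urgent message, or None when the queue is empty."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]
```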

Queue-based load levelling.

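The Saga design pattern is a way to manage data consistency across microservices in distributed transaction scenarios. It has a similar use case to 2PC but works differently: each step commits locally and is undone by a compensating action if a later step fails.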
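A minimal orchestration-style saga might look like the following. It is a sketch, not a production coordinator: `run_saga` and the step tuple layout are hypothetical, and real sagas also have to persist progress and cope with compensations that fail.

```python
from typing import Callable, List, Tuple

# Each step is (name, action, compensation); the layout is an assumption.
Step = Tuple[str, Callable[[], None], Callable[[], None]]


def run_saga(steps: List[Step]) -> List[str]:
    """Run steps in order; on failure, undo completed steps in reverse order."""
    log: List[str] = []
    completed: List[Step] = []
    for step in steps:
        name, action, _ = step
        try:
            action()
        except Exception:
            log.append(f"{name}: failed")
            # Compensate only the steps that actually committed.
            for done_name, _, compensate in reversed(completed):
                compensate()
                log.append(f"{done_name}: compensated")
            return log
        completed.append(step)
        log.append(f"{name}: done")
    return log
```

Between a step committing and its compensation running, other readers can see the intermediate state, which is the consistency trade-off a saga accepts.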

2PC (Two-phase Commit): A simple pattern to ensure that either all of the distributed web services are updated, or no transaction is done on any of them.
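The two phases can be sketched as a vote followed by a decision. This is a simplified, in-memory illustration with hypothetical `Participant` and `two_phase_commit` names; it ignores coordinator crashes and timeouts, which are the hard parts in practice.

```python
from typing import List


class Participant:
    def __init__(self, name: str, can_commit: bool = True) -> None:
        self.name = name
        self.can_commit = can_commit
        self.state = "idle"

    def prepare(self) -> bool:
        """Phase 1: vote yes only if the change can definitely be committed."""
        self.state = "prepared" if self.can_commit else "aborted"
        return self.can_commit

    def commit(self) -> None:
        self.state = "committed"

    def rollback(self) -> None:
        self.state = "aborted"


def two_phase_commit(participants: List[Participant]) -> bool:
    # Phase 1: ask every participant to prepare and collect the votes.
    votes = [p.prepare() for p in participants]
    # Phase 2: commit everywhere only if the vote was unanimous.
    if all(votes):
        for p in participants:
            p.commit()
        return True
    for p in participants:
        p.rollback()
    return False
```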

Throttling pattern
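One common way to implement throttling is a token bucket, sketched below. The `TokenBucket` class is hypothetical, and the clock is injectable purely so the behaviour can be checked without waiting in real time.

```python
import time
from typing import Callable


class TokenBucket:
    """Allows short bursts up to `capacity`, then a steady `refill_per_second`."""

    def __init__(
        self,
        capacity: int,
        refill_per_second: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._capacity = capacity
        self._rate = refill_per_second
        self._clock = clock
        self._tokens = float(capacity)
        self._last = clock()

    def allow(self) -> bool:
        """Return True if the request may proceed, False if it should be throttled."""
        now = self._clock()
        # Top up tokens for the time elapsed, never exceeding capacity.
        elapsed = now - self._last
        self._tokens = min(self._capacity, self._tokens + elapsed * self._rate)
        self._last = now
        if self._tokens >= 1:
            self._tokens -= 1
            return True
        return False
```

A caller that receives `False` would typically respond with HTTP 429 or queue the work for later.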

Retry pattern - useful for ensuring transient failures are corrected.
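A retry with exponential backoff could be sketched like this. `TransientError` is a hypothetical marker exception, and the `sleep` parameter is injectable so the delays can be observed without actually waiting.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, throttling)."""


def retry(
    operation: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `operation`, retrying transient failures with exponential backoff."""
    for attempt in range(1, attempts + 1):
        try:
            return operation()
        except TransientError:
            if attempt == attempts:
                raise  # out of attempts: surface the last failure
            # Delay doubles each time: base, 2x base, 4x base, ...
            sleep(base_delay * 2 ** (attempt - 1))
    raise ValueError("attempts must be at least 1")
```

Only errors known to be transient are retried; anything else propagates immediately so that genuine bugs are not hidden.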

The Twelve-Factor App is a methodology for building software-as-a-service (SaaS) applications.

Capability-Centric Architecture (CCA): structures a system around business capabilities, with each capability implemented as an independent, self‑contained unit.

Domain-Driven Design: focuses on the core business domain and models it with clear, meaningful concepts.

Hexagonal Architecture: A system design where the core logic sits in the middle and communicates with external systems through ports and adapters.
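The ports-and-adapters idea can be sketched as follows. The core `OrderService` depends only on a port (an interface), and adapters plug in from the outside. All names here are hypothetical, and the in-memory adapter stands in for a real email or SMS integration.

```python
from typing import List, Protocol, Tuple


class NotificationPort(Protocol):
    """Port: what the core needs from the outside world, with no technology named."""

    def send(self, recipient: str, message: str) -> None: ...


class OrderService:
    """Core logic: depends only on the port, never on a concrete channel."""

    def __init__(self, notifier: NotificationPort) -> None:
        self._notifier = notifier

    def confirm(self, order_id: int, customer_email: str) -> None:
        self._notifier.send(customer_email, f"Order {order_id} confirmed")


class InMemoryNotifier:
    """Adapter for tests; a real adapter might wrap an email or SMS API."""

    def __init__(self) -> None:
        self.sent: List[Tuple[str, str]] = []

    def send(self, recipient: str, message: str) -> None:
        self.sent.append((recipient, message))
```

Replacing `InMemoryNotifier` with another adapter requires no change to `OrderService`, which is the property the pattern is after.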

Clean Architecture:

Streaming/MessageBus: Kafka, IoT.

Azure Messaging Service is made up of 6 products:

1. Service Bus - Normal ESB. Messages are placed in a queue, and one or more apps can connect to queues or subscribe to topics.

2. Relay Service - Useful for SOA when you have infrastructure on-prem. It exposes cloud-based endpoints for your on-prem data sources.

3. Event Grid - HTTP event routing for real-time notifications.

4. Event Hub - IoT ingestion, highly scalable.

5. Storage Queues - point-to-point messaging, very cheap and simple, but very little functionality.

6. Notification Hub -

Azure Durable Functions - Azure Functions make it easy to create logic, but are not good at long-running or varying-duration work. To get around the timeout limits, there are a couple of patterns for Functions that improve their ability to handle long-running operations. The most common patterns are: Async HTTP APIs (trigger a function using HTTP, set off other functions, and have the client wait for an answer by polling a separate function for the result), Function Chaining (execute functions sequentially, each starting once the previous function completes), and Fan-out/Fan-in (a first function calls multiple functions that run in parallel, then their results are aggregated).
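The shape of function chaining and fan-out/fan-in can be illustrated with plain `asyncio`. This deliberately does not use the Durable Functions SDK; the function names are hypothetical stand-ins for activity functions, and the sketch only shows the control flow an orchestrator would follow.

```python
import asyncio
from typing import List


async def double(value: int) -> int:
    """Hypothetical activity; a real one might call a slow external service."""
    await asyncio.sleep(0)
    return value * 2


async def increment(value: int) -> int:
    await asyncio.sleep(0)
    return value + 1


async def chain(value: int) -> int:
    """Function chaining: each step starts only after the previous one completes."""
    doubled = await double(value)
    return await increment(doubled)


async def sum_chunk(chunk: List[int]) -> int:
    await asyncio.sleep(0)
    return sum(chunk)


async def fan_out_fan_in(chunks: List[List[int]]) -> int:
    """Fan-out: start one task per chunk. Fan-in: wait for all, then aggregate."""
    partial_sums = await asyncio.gather(*(sum_chunk(c) for c in chunks))
    return sum(partial_sums)
```

For example, `asyncio.run(fan_out_fan_in([[1, 2], [3, 4], [5]]))` processes the three chunks concurrently and then combines the partial sums.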

Lambda Architecture: great for large data architectures. It combines batch and streaming processing. Each transaction is pushed into a queue/stream (Kafka/Azure Queues/Azure Event Grid), and large data can be stored for later batch processing.

"Onion Architecture is based on the inversion of control principle. Onion Architecture is comprised of multiple concentric layers interfacing with each other towards the core representing the domain. The architecture does not depend on the data layer as in classic multi-tier architectures but on the actual domain models." Codeguru.com

Distributed Application Runtime (Dapr): An event-driven, portable runtime for building distributed applications on the cloud. It is an open-source project that tries to support any language/framework through consistent, portable APIs (exposed over HTTP) and extensible, platform-agnostic components. Building blocks are made up of components that provide specific functionality: secure service-to-service calls, state management, publish and subscribe (for Azure ESB, Azure Queue), resource binding (Azure Functions), observability (Azure SignalR Service), and secret stores (Azure Key Vault).

Cell Architecture: a collection of components that are connectable and observable. The Cell Gateway is basically the service exposed, similar to APIM/an API Gateway. One or more cell components make up the Cell Gateway for ingress data. Egress is done using a sidecar (App Insights is a great example of a sidecar service pattern). It is basically an API-first architecture.

SAST/DAST: application security testing methodologies used to find application vulnerabilities. A related threat modelling approach is STRIDE.

DACI (Decision Making Framework): stands for "driver, approver, contributor, informed", used to make effective and efficient group/team decisions.

OpenAPI vs GraphQL

The OpenAPI specification (previously known as the Swagger specification) is my default for an API, as it allows for a known RESTful API that anyone with access can use. OpenAPI has set contracts that return defined objects, which is great: you can work with the API like a database, with simple CRUD operations as defined by the specification. The issue is that the returned objects are fixed in structure, so you may need 2 or more queries to get the data you are looking for. Alternatively, GraphQL allows developers to request data exactly as they want it.

OpenAPI example:

/api/users/{2} // Returns the user object for user 2

/api/users/{2}/orders/10 // Returns the last 10 orders for the user

GraphQL example:

Post a single HTTP request.

    query {
      User(id: "") {
        name
        email
        orders(last: 10) {
          orderid
          totalamount
          datemodified
        }
      }
    }
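To show what "a single HTTP request" might carry, the sketch below builds the JSON body for a GraphQL POST, using variables instead of hard-coded values. The argument types (`ID!`, `Int!`) and the operation name `UserOrders` are assumptions about a hypothetical schema, and nothing is actually sent over the network.

```python
import json

# Field names follow the example query; the variable types are assumed.
USER_ORDERS_QUERY = """
query UserOrders($id: ID!, $last: Int!) {
  User(id: $id) {
    name
    email
    orders(last: $last) {
      orderid
      totalamount
      datemodified
    }
  }
}
"""


def build_graphql_body(user_id: str, last: int) -> str:
    """Return the JSON body for one POST that fetches a user and recent orders."""
    return json.dumps(
        {
            "query": USER_ORDERS_QUERY,
            "variables": {"id": user_id, "last": last},
        }
    )
```

The body would be posted to the service's GraphQL endpoint with a `Content-Type: application/json` header; the REST equivalent needs one call for the user and another for the orders.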

You can see that GraphQL is potentially a better choice for complex, changing systems. I also like the idea of using Hasura as an ORM-style layer over PostgreSQL (and hopefully SQL Server and others).