Series

App Insights for Power Platform - Part 1 - Series Overview

App Insights for Power Platform - Part 2 - App Insights and Azure Log Analytics

App Insights for Power Platform - Part 3 - Canvas App Logging (Instrumentation key)

App Insights for Power Platform - Part 4 - Managed Environment Logging (New 2025-05-09)

App Insights for Power Platform - Part 5 - Logging for APIM (this post)

App Insights for Power Platform - Part 6 - Power Automate Logging

App Insights for Power Platform - Part 7 - Monitoring Azure Dashboards

App Insights for Power Platform - Part 8 - Verify logging is going to the correct Log analytics

App Insights for Power Platform - Part 9 - Power Automate Licencing

App Insights for Power Platform - Part 10 - Custom Connector enable logging

App Insights for Power Platform - Part 11 - Custom Connector Behaviour from Canvas Apps Concern

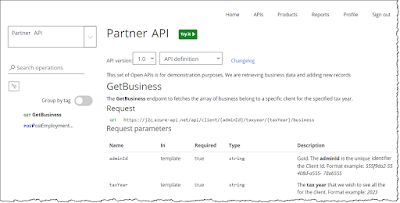

Overview: APIM (Azure API Management) is often part of your Power Platform solutions, for example to monitor and control all inbound and outbound traffic, or to wrap Azure Functions.

Within APIM you can add multiple App Insights instances. You can send all logging to a single instance and override specific APIs to log to different instances, which keeps the logging nice and granular.
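As a rough sketch of the "one shared instance plus per-API overrides" setup, the Python below builds the Azure Resource Manager REST calls for two App Insights loggers and two `applicationinsights` diagnostics, one at the service (global) level and one on a single API. The subscription, resource group, service, logger and API names (`ai-shared`, `ai-gov-api`, `gov-api`) are hypothetical placeholders, and `2022-08-01` is just one APIM management API version, so check for a current one. The code only builds URLs and bodies; sending them needs an Azure AD token (for example via `az rest`).

```python
from typing import Optional, Tuple

# Hypothetical identifiers: substitute your own subscription, resource group
# and APIM service name.
SUBSCRIPTION_ID = "00000000-0000-0000-0000-000000000000"
RESOURCE_GROUP = "rg-power-platform"
APIM_SERVICE = "apim-power-platform"
API_VERSION = "2022-08-01"  # one APIM management API version; check for newer

# Resource ID of the APIM instance, and the ARM endpoint built from it.
APIM_RESOURCE_ID = (
    f"/subscriptions/{SUBSCRIPTION_ID}/resourceGroups/{RESOURCE_GROUP}"
    f"/providers/Microsoft.ApiManagement/service/{APIM_SERVICE}"
)
ARM_BASE = "https://management.azure.com" + APIM_RESOURCE_ID


def logger_request(logger_id: str, instrumentation_key: str) -> Tuple[str, dict]:
    """PUT URL and body that register one App Insights instance as an APIM logger."""
    url = f"{ARM_BASE}/loggers/{logger_id}?api-version={API_VERSION}"
    body = {
        "properties": {
            "loggerType": "applicationInsights",
            "credentials": {"instrumentationKey": instrumentation_key},
        }
    }
    return url, body


def diagnostic_request(logger_id: str, api_id: Optional[str] = None) -> Tuple[str, dict]:
    """PUT URL and minimal body for the App Insights diagnostic.

    api_id=None targets the service-wide (global) diagnostic; passing an API id
    points that one API at a different logger.
    """
    scope = f"{ARM_BASE}/apis/{api_id}" if api_id else ARM_BASE
    url = f"{scope}/diagnostics/applicationinsights?api-version={API_VERSION}"
    # loggerId is the full resource ID of the logger created above.
    body = {"properties": {"loggerId": f"{APIM_RESOURCE_ID}/loggers/{logger_id}"}}
    return url, body


if __name__ == "__main__":
    # One shared instance for everything, plus a separate one for a single API.
    calls = [
        logger_request("ai-shared", "<shared-instrumentation-key>"),
        logger_request("ai-gov-api", "<gov-api-instrumentation-key>"),
        diagnostic_request("ai-shared"),
        diagnostic_request("ai-gov-api", api_id="gov-api"),
    ]
    for url, body in calls:
        print("PUT", url)
```

With both diagnostics in place, `gov-api` should log to its own instance while every other API keeps using `ai-shared`. The diagnostic body here carries only `loggerId`; sampling, error and header/body settings are left out to keep the sketch focused on routing.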

Setup Logging

In the diagram below, I used the Operation Parent Id to find a log entry in the App Insights transaction logs. From there I can see the APIM entry, the entry for the call to the third-party backend, and that backend's HTTP response.
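To repeat that lookup from the Logs blade rather than the transaction view, a query along these lines could work. This is a sketch that assumes the classic Application Insights schema (`requests`, `dependencies`, `operation_Id`, `operation_ParentId`); workspace-based resources queried from Log Analytics use `AppRequests`/`AppDependencies` with `OperationId`/`ParentId` instead. The Python only assembles the KQL text, escaping the id as a string literal; `transaction_query` is a hypothetical helper name.

```python
def kql_string(value: str) -> str:
    """Quote a value as a KQL double-quoted string literal."""
    escaped = value.replace("\\", "\\\\").replace('"', '\\"')
    return f'"{escaped}"'


def transaction_query(parent_id: str) -> str:
    """KQL that finds an item linked to parent_id, then returns its whole operation.

    Assumes the classic Application Insights tables (requests, dependencies).
    """
    return "\n".join([
        f"let parent = {kql_string(parent_id)};",
        # Resolve the operation that the id belongs to.
        "let op = toscalar(",
        "    union requests, dependencies",
        "    | where id == parent or operation_ParentId == parent",
        "    | take 1",
        "    | project operation_Id);",
        # Return every request and dependency in that operation, in time order.
        "union requests, dependencies",
        "| where operation_Id == op",
        "| project timestamp, itemType, cloud_RoleName, name, target,",
        "          resultCode, success, id, operation_ParentId",
        "| order by timestamp asc",
    ])


if __name__ == "__main__":
    print(transaction_query("<operation-parent-id>"))
```

Paste the printed query into Logs; the `target` and `resultCode` columns are where the backend host and its HTTP status show up for the dependency rows.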

You can connect these so that you can follow the whole chain: the Canvas App session, then the function call, which calls APIM, and then the backend call to the government third-party API.
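That end-to-end view works because each telemetry item records the id of the item that called it (`operation_ParentId`). The sketch below shows how exported rows could be nested into the chain described above. The `TelemetryItem` class, its field names and the `call_chain` helper are hypothetical, and the rows in the usage section are made-up examples, not real telemetry.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class TelemetryItem:
    item_id: str              # the item's own id
    parent_id: Optional[str]  # its operation_ParentId (None for the first item)
    role: str                 # which component logged it (cloud_RoleName)
    name: str


def call_chain(items: List[TelemetryItem]) -> List[str]:
    """Indent each item under the item whose id matches its parent id."""
    known_ids = {item.item_id for item in items}
    children: Dict[str, List[TelemetryItem]] = defaultdict(list)
    roots: List[TelemetryItem] = []
    for item in items:
        if item.parent_id in known_ids:
            children[item.parent_id].append(item)
        else:
            roots.append(item)  # no parent, or parent not in this result set

    lines: List[str] = []

    def walk(item: TelemetryItem, depth: int) -> None:
        lines.append("  " * depth + f"{item.role}: {item.name}")
        for child in children[item.item_id]:
            walk(child, depth + 1)

    for root in roots:
        walk(root, 0)
    return lines


if __name__ == "__main__":
    # Hypothetical rows: Canvas App -> Azure Function -> APIM -> gov backend API.
    rows = [
        TelemetryItem("a1", None, "canvas-app", "Canvas App session"),
        TelemetryItem("b2", "a1", "func-lookup", "POST /api/lookup"),
        TelemetryItem("c3", "b2", "apim", "GET /gov-api/records"),
        TelemetryItem("d4", "c3", "apim", "backend: gov API (HTTP 200)"),
    ]
    print("\n".join(call_chain(rows)))
```

Each hop is indented one level under its caller, so a broken link (for example a component that does not propagate correlation headers) shows up as a second root instead of a nested line.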

- Logging can be global or set at the API level in APIM.

- Telemetry "Sampling" logs only the percentage of requests you specify.

- "Always log errors" logs every failed request APIM handles, regardless of the sampling percentage.

- Headers and body are not included in logs unless you specify them.
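The settings in the list above map onto properties of the APIM diagnostic resource, which can be applied globally or per API. This Python sketch assembles such a body: `sampling.percentage`, `alwaysLog: "allErrors"`, and per-direction `headers` and `body.bytes`, which default to an empty list and 0 here so nothing extra is logged unless you ask for it. The helper name `diagnostic_properties`, its defaults, and the choice of `W3C` correlation and `information` verbosity are assumptions for illustration, not settings taken from this post.

```python
import json
from typing import Iterable


def _message_settings(headers: list, body_bytes: int) -> dict:
    # Which headers to log, and how many bytes of the payload (0 = no body).
    return {"headers": list(headers), "body": {"bytes": body_bytes}}


def diagnostic_properties(
    logger_resource_id: str,
    sampling_percentage: float = 100.0,
    always_log_errors: bool = True,
    headers: Iterable[str] = (),
    body_bytes: int = 0,
) -> dict:
    """Body for an APIM App Insights diagnostic (global or per-API)."""
    if not 0 <= sampling_percentage <= 100:
        raise ValueError("sampling_percentage must be between 0 and 100")
    if body_bytes < 0:
        raise ValueError("body_bytes must not be negative")
    header_list = list(headers)  # materialise so it can be reused below

    def http_settings() -> dict:
        # Fresh dicts per direction so editing one does not change the others.
        return {
            "request": _message_settings(header_list, body_bytes),
            "response": _message_settings(header_list, body_bytes),
        }

    properties = {
        "loggerId": logger_resource_id,
        # Fixed-rate sampling: only this percentage of requests is logged.
        "sampling": {"samplingType": "fixed", "percentage": sampling_percentage},
        "frontend": http_settings(),  # client <-> APIM gateway
        "backend": http_settings(),   # APIM gateway <-> backend service
        "httpCorrelationProtocol": "W3C",
        "verbosity": "information",
    }
    if always_log_errors:
        # Failed requests are logged even when sampling would have skipped them.
        properties["alwaysLog"] = "allErrors"
    return {"properties": properties}


if __name__ == "__main__":
    body = diagnostic_properties(
        "/subscriptions/<sub>/resourceGroups/<rg>/providers/Microsoft.ApiManagement"
        "/service/<apim>/loggers/<logger>",
        sampling_percentage=25,
        headers=["x-correlation-id"],
        body_bytes=1024,
    )
    print(json.dumps(body, indent=2))
```

Logging bodies can capture personal or sensitive data from the backend, so keeping `body_bytes` at 0 unless you need it is a reasonable default.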
