Problem: Create an OpenAPI Specification (OAS) endpoint for testing using Azure API Management (APIM)

Background:

- Azure API Management (APIM) is a great service for bringing multiple APIs under a single publishable layer (think MuleSoft). I like to use APIM to set up an initial contract that developers can use before the actual API is built. This allows both the consumer teams and the back-end development team to work independently against the agreed OpenAPI contract.

- The OpenAPI Specification (OAS) began as the Swagger specification, which was later donated to the OpenAPI Initiative and renamed. A lot of companies now use it.

- Swagger has great tooling for creating OAS APIs, documentation, stub hosting, and generating code (such as .NET Core) that you can import into your dev environment.

- Azure has a developer APIM instance licence that is relatively inexpensive compared with the other options, but leaving it running for my personal dev work still gets pretty expensive. Creating an APIM instance takes up to 20 minutes.

Overview: This post outlines the steps to set up an APIM instance using an OpenAPI file created in Swagger. The APIM service is set up to return mock data.

Steps:

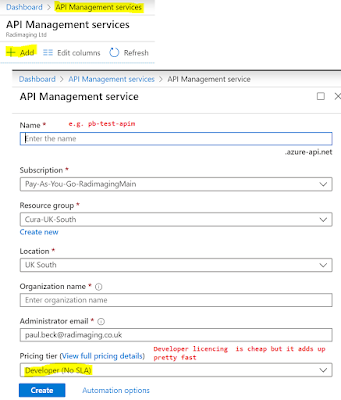

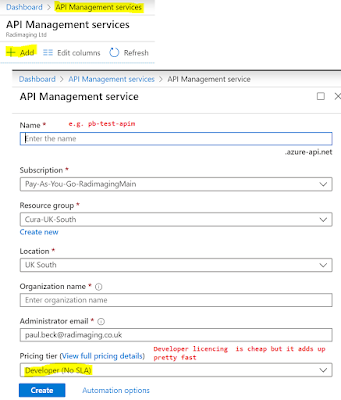

1. Create a new instance of APIM - Do this first as it takes up to 20 minutes before the service is ready.

Figure: Create an APIM instance (step 1)

2. Open swagger.io in a browser, sign up, and click "Create New". This gives you a starting OAS file for your custom API.

Figure: Log in to Swagger and create the OpenAPI file using YAML (step 2)

3. Using the Swagger Editor, create the desired endpoints. It took me a few hours to get used to YAML, as it is whitespace-sensitive, but it is very readable.

Figure: Swagger editor (step 3)

4. At the top right of the Swagger editor is the "Export" option. Click Export > Download API > JSON Unresolved, and keep the .json file ready to import into your APIM service.

5. Open APIM and add a new API: APIs > Add API > OpenAPI, as shown below.

Figure: Import OAS file into APIM

6. Import the file, and the fields get populated from your specification.

Figure: Upload the OpenAPI JSON file into APIM

7. The list of operations shows up. In my case I only have a single GET operation called \Get Customers.

Figure: APIM service is now created but not connected to a back-end

8. Add mocking to the endpoint. Select the operation (e.g. \Get List all Customers), then click the "Add policy" button under Inbound processing. Select "Mock-responses" > Save.

9. Generate the JSON responses:

a) Select the Operation i.e. \GET List all customers

b) Frontend drop down > Form-based editor

c) Click the "Responses" tab

d) Click the "200 OK" link

e) Click in the Sample box, click "Auto Generate", then Save
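For reference, the kind of file exported in step 4 and imported in step 5 can be sketched in code. This is a minimal, hypothetical OpenAPI 3.0 document with a single GET operation, built as a Python dictionary and serialized to JSON. The title, the `/customers` path, the `listCustomers` operation ID, and the customer fields are all assumptions made for illustration, not the actual file from this post. The `example` under the 200 response plays the same role as the sample generated in step 9.

```python
import json

# Hypothetical minimal OpenAPI 3.0 document with one GET operation.
# Title, path, operationId, and customer fields are illustrative assumptions.
spec = {
    "openapi": "3.0.0",
    "info": {"title": "Customers API", "version": "1.0.0"},
    "paths": {
        "/customers": {
            "get": {
                "summary": "List all customers",
                "operationId": "listCustomers",
                "responses": {
                    "200": {
                        "description": "OK",
                        "content": {
                            "application/json": {
                                "schema": {
                                    "type": "array",
                                    "items": {
                                        "$ref": "#/components/schemas/Customer"
                                    },
                                },
                                # Sample payload a mock could return.
                                "example": [
                                    {"id": 1, "name": "Example Customer"}
                                ],
                            }
                        },
                    }
                },
            }
        }
    },
    "components": {
        "schemas": {
            "Customer": {
                "type": "object",
                "properties": {
                    "id": {"type": "integer"},
                    "name": {"type": "string"},
                },
            }
        }
    },
}


def to_json(document=spec):
    """Serialize the OpenAPI document to an indented JSON string."""
    return json.dumps(document, indent=2)
```

Writing the result of `to_json()` to a `.json` file gives something that could be imported the same way as the Swagger export, assuming your APIM instance accepts OpenAPI 3.0 files.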

The APIM service is now set up and we are ready to test.

1. Test using APIM's Testing tool

Check the 200 Response

Figure: Mocked response from APIM test tool

2. Testing using Postman

Open Postman, and craft the request as shown below:

Figure: Postman APIM testing
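The same request can also be sketched in a few lines of Python, which is handy for scripting the check. This is only a sketch under stated assumptions: the gateway URL and the subscription key below are placeholders, and the `Ocp-Apim-Subscription-Key` header is only needed if your API has "Subscription required" enabled. `build_request` and `fetch_mock` are hypothetical helper names.

```python
import json
import urllib.request

# Placeholders: replace with your own APIM gateway URL and subscription key.
APIM_URL = "https://my-apim-instance.azure-api.net/customers"  # hypothetical
SUBSCRIPTION_KEY = "<your-subscription-key>"


def build_request(url, key):
    """Build a GET request carrying the APIM subscription key header."""
    return urllib.request.Request(
        url,
        headers={
            "Ocp-Apim-Subscription-Key": key,
            "Accept": "application/json",
        },
        method="GET",
    )


def fetch_mock(url=APIM_URL, key=SUBSCRIPTION_KEY):
    """Send the request and return (status code, parsed JSON body)."""
    with urllib.request.urlopen(build_request(url, key), timeout=10) as resp:
        return resp.status, json.loads(resp.read().decode("utf-8"))
```

Calling `fetch_mock()` with a real URL and key should return status 200 and the mocked JSON body if the mock policy from step 8 is in place.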

Note: There are several competitor products. MuleSoft, Amazon API Gateway, Postman, and Swagger also offer a lot of these features. There are other products that I have not used, such as Kong API Gateway and Apigee on GCP. Gartner has a list of competitors and publishes a Magic Quadrant each year.