Message queues have been around for many years, and I've implemented them using SonicMQ, MSMQ and IBM MQ. I like to keep my architecture as simple as possible, so I still use queues, but deciding on an "eventing" architecture brings Enterprise Service Buses (ESB) and all the other players into the picture. The other two native PaaS players for messaging are Event Grid and Event Hubs (IoT). It comes down to what you are trying to achieve, whether you are going to need more functionality later, what your business has available, and the skills you have to work with.

I like Azure Storage Queues: they are cheap and simple to set up and understand, so they are my pick for simple message queue capability:

Azure Storage Queues sit on Azure Storage (type: Queue Storage). Multiple queues can live in a single storage account, and queue names must be lowercase. Messages can be up to 64 KB. The order of queued messages is not guaranteed. The maximum message lifespan used to be 7 days (that is still the default); now the maximum time-to-live can be set to -1, meaning the message never expires. A SAS token gives programmatic access. There is a REST API to add and pull messages from the queue (it also has a peek API). "Poison queue" handling is available through the Azure Functions and WebJobs queue triggers: when a message's "dequeue count" exceeds the configured threshold, the message is moved into a separate poison queue. Pricing is pretty cheap: LRS is the cheapest, with the geo-redundant options such as RA-GRS (read-access geo-redundant storage) being the most expensive.
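
As a rough sketch of the points above, the snippet below adds a message that never expires and then peeks at the queue without dequeuing anything. It assumes the Azure.Storage.Queues NuGet package; the queue name `orders`, the class name and the `AZURE_STORAGE_CONNECTION_STRING` environment variable are placeholders chosen for illustration.

```csharp
using System;
using Azure.Storage.Queues;
using Azure.Storage.Queues.Models;

public static class StorageQueueSketch
{
    // A time-to-live of -1 seconds asks the service to keep the message until it is deleted.
    public static readonly TimeSpan NeverExpire = TimeSpan.FromSeconds(-1);

    public static void SendAndPeek()
    {
        // Assumption: the connection string is supplied via this environment variable.
        string connectionString =
            Environment.GetEnvironmentVariable("AZURE_STORAGE_CONNECTION_STRING");

        // Queue names must be lowercase; "orders" is a hypothetical name.
        var queueClient = new QueueClient(connectionString, "orders");
        queueClient.CreateIfNotExists();

        // Arguments: message text, visibility timeout (default), time-to-live.
        queueClient.SendMessage("Hello queue", default, NeverExpire);

        // Peek reads messages without hiding them or raising their dequeue count.
        PeekedMessage[] peeked = queueClient.PeekMessages(maxMessages: 5);
        foreach (PeekedMessage message in peeked)
        {
            Console.WriteLine($"{message.MessageId}: {message.MessageText}");
        }
    }
}
```

Calling `StorageQueueSketch.SendAndPeek()` against a real storage account should print the ID and text of up to five messages currently at the front of the queue.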

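Get a message from the queue, update its contents, and keep it invisible for another 60 seconds (code source: Microsoft Docs):

    // Requires: using Azure.Storage.Queues; using Azure.Storage.Queues.Models;
    QueueClient queueClient = new QueueClient(connectionString, queueName);
    // Receive messages from the queue; they are hidden from other readers for now.
    QueueMessage[] messages = queueClient.ReceiveMessages();
    if (messages.Length > 0)
    {
        // Replace the content of the first message and hide it for another 60 seconds.
        queueClient.UpdateMessage(messages[0].MessageId,
            messages[0].PopReceipt,
            "Updated contents",
            TimeSpan.FromSeconds(60.0));
    }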
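
The dequeue-count threshold mentioned earlier can also be handled by hand when reading a Storage Queue directly. The following is only a sketch under assumptions: the threshold of 5, the separate poison `QueueClient`, and the `process` callback are all hypothetical and would need adapting to a real workload.

```csharp
using System;
using Azure.Storage.Queues;
using Azure.Storage.Queues.Models;

public static class PoisonHandlingSketch
{
    // Hypothetical threshold; pick whatever suits your workload.
    public const long MaxDequeueCount = 5;

    // True once a message has been dequeued more often than the threshold allows.
    public static bool IsPoison(long dequeueCount) => dequeueCount > MaxDequeueCount;

    public static void ProcessOne(QueueClient queue, QueueClient poisonQueue, Action<string> process)
    {
        QueueMessage[] messages = queue.ReceiveMessages(maxMessages: 1);
        if (messages.Length == 0) return;

        QueueMessage message = messages[0];
        if (IsPoison(message.DequeueCount))
        {
            // Park the message so it stops being retried on the main queue.
            poisonQueue.SendMessage(message.MessageText);
            queue.DeleteMessage(message.MessageId, message.PopReceipt);
            return;
        }

        try
        {
            process(message.MessageText);
            // Delete only after successful processing.
            queue.DeleteMessage(message.MessageId, message.PopReceipt);
        }
        catch (Exception)
        {
            // Leave the message alone: it reappears after the visibility timeout
            // and its DequeueCount is higher on the next receive.
        }
    }
}
```

Keeping the threshold check in a small pure function like `IsPoison` makes the policy easy to change and to unit test without touching Azure.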

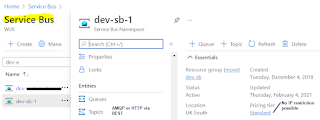

Azure Service Bus is a fully managed Enterprise Service Bus (ESB). It allows for standard queues (point-to-point) or topics, also called pub-sub (point-to-multipoint) messaging. For external connectivity, Azure Relay offers two options: Hybrid Connections (WebSockets) and WCF Relay. Messages can be up to 256 KB, except on Premium, where up to 1 MB is allowed in queues; in topics, I think, the maximum message size allowed is 100 MB. Unlimited lifespan. Dead-lettering option. Programmatic access via SAS token or AAD. Supports access via REST or AMQP (used for many years as the standard for message queues). Has duplicate message detection, which discards a message resent with the same MessageId inside the detection window (aiming at "at-most-once" delivery).
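
To make the topic, duplicate detection and dead-lettering points more concrete, here is a minimal sketch using the Azure.Messaging.ServiceBus NuGet package. The topic name `orders`, the subscription name `billing`, the `order-42` ID and the dead-letter reason are hypothetical, and duplicate detection is assumed to be enabled on the topic.

```csharp
using System;
using System.Threading.Tasks;
using Azure.Messaging.ServiceBus;

public static class ServiceBusTopicSketch
{
    // Reusing a stable business ID as MessageId lets duplicate detection drop resends.
    public static ServiceBusMessage BuildMessage(string orderId, string body) =>
        new ServiceBusMessage(body) { MessageId = orderId };

    public static async Task PublishAndConsumeAsync(string connectionString)
    {
        await using var client = new ServiceBusClient(connectionString);

        // Publish to the (hypothetical) "orders" topic.
        ServiceBusSender sender = client.CreateSender("orders");
        await sender.SendMessageAsync(BuildMessage("order-42", "{\"total\": 10}"));

        // Read from the (hypothetical) "billing" subscription of that topic.
        ServiceBusReceiver receiver = client.CreateReceiver("orders", "billing");
        ServiceBusReceivedMessage received =
            await receiver.ReceiveMessageAsync(TimeSpan.FromSeconds(10));
        if (received == null) return; // nothing arrived within the wait time

        try
        {
            Console.WriteLine(received.Body.ToString());
            // Completing removes the message from the subscription.
            await receiver.CompleteMessageAsync(received);
        }
        catch (Exception ex)
        {
            // Move the message to the subscription's dead-letter queue for inspection.
            await receiver.DeadLetterMessageAsync(received, "ProcessingFailed", ex.Message);
        }
    }
}
```

Every subscription on the topic gets its own copy of the message, which is what makes topics point-to-multipoint.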

Competitor options for Azure Service Bus, including message-exchanging technologies: AWS SQS, GCP Pub/Sub, NATS, Oracle ESB, JBoss Fuse, Mule ESB (from MuleSoft), IBM WebSphere ESB, BizTalk, Azure Event Grid, Azure Storage Queues, Sonic ESB and, I guess, all the message queues like SonicMQ and IBM MQ.

Update 31 Jan 2022:

Problem: Messages are pushed onto the topic but are taking between 5 and 35 minutes to process. The listener was an app registered on Service Fabric, and this started happening after we rebuilt a new instance of Service Fabric. The Service Bus still worked, but one subscriber was taking this extra 5-35 minutes for what had previously been processed within 1 second.

Resolution: As always, reboot :) I disabled the topic and re-enabled it, and the messages were being processed within 1 second again. I could not find this behavior in Google searches, and after a fair amount of time checking messages and configuration, a good old restart fixed the delay in message processing.
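
The same disable and re-enable step could presumably be scripted rather than clicked through. The sketch below uses `ServiceBusAdministrationClient` from the Azure.Messaging.ServiceBus package; the class and method names are hypothetical, and it assumes a connection string with Manage rights on the namespace.

```csharp
using System.Threading.Tasks;
using Azure.Messaging.ServiceBus.Administration;

public static class TopicRestartSketch
{
    public static async Task RestartTopicAsync(string connectionString, string topicName)
    {
        // Assumption: the connection string carries Manage rights on the namespace.
        var admin = new ServiceBusAdministrationClient(connectionString);

        // Fetch the current settings and switch the topic off.
        TopicProperties topic = await admin.GetTopicAsync(topicName);
        topic.Status = EntityStatus.Disabled;
        topic = await admin.UpdateTopicAsync(topic);

        // Switch it back on using the properties returned by the service.
        topic.Status = EntityStatus.Active;
        await admin.UpdateTopicAsync(topic);
    }
}
```

While the topic is disabled, senders and receivers will get errors, so a step like this is best run in a quiet window.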

More Info:

NATS - Common ESB software gaining popularity