Overview: Dataverse is CDS. There is a long story behind the naming, but ultimately Dataverse is a data store with an advanced security model, open APIs, workflows, pipeline injection and more. It is awesome.

It is high performance, and it would take considerable effort and many components to deliver similar, or even semi-close, functionality yourself. It does have limitations, mainly around performance at scale, but don't let that fool you: Dataverse is fast and powerful, it's just not the right option for massive, industrialized storage. Cost is also a key consideration.

The biggest mistake I see is people making the same mistakes they make with relational databases, namely:

Poor Dataverse implementations come down to 1) poor entity relationship design, 2) either too many tables holding duplicate data, or too few tables being stretched to suit one dev team's needs while ignoring existing systems, 3) poor security and 4) too many cooks.

Basically, like any database service, you need to have owners, try to keep the structure logical and expand it appropriately. The idea behind the data model used by Dataverse is to have centralized, secure, shareable data such as customer or account information. It's simple: treat Dataverse as you would your most precious core database, and have an owner who understands and approves changes.

Note: Microsoft have had some trouble naming Dataverse; it was previously known as the Common Data Service (CDS).

Overview: Dataverse helps improve processes. It also helps reduce the time it takes to build IT capability, remove shadow IT, and improve security and governance. Dataverse is the common data store we need to use to be effective. As part of the Power Platform, it allows us to build custom software fairly quickly.

Updated 07-July-2022

Dataverse provides relational data storage (it actually runs on Azure SQL (Azure Elastic Pools), Cosmos DB and Blob storage) along with lots of tooling, e.g. modelling tools. I think of it as a SQL database with lots of extra features, most importantly business rules and workflows.

- Dataverse relies on AAD for security

- Easy data modelling, with support for many-to-many relationships (NB!)

- Easy to import data from Power Query-compatible data sources

- Role-based row-level (rows were previously called records) and column-level (columns were previously called fields) security. See Dataverse Security in a Nutshell at the bottom of this post.

- Provides a secure REST (OData v4) API over the Common Data Model. It's awesome.

- Easy to generate UI using Power Apps model-driven apps

- Ability to inject business rules when data comes into or out of Dataverse (you can also use .NET code via plug-ins)

- Can also store files (ultimately in blob storage)

- Search that indexes entities and files

- CDS called them entities; Dataverse calls them tables. Some of the UI still refers to entities. Just assume entity and table are interchangeable terms.
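To make the REST API point concrete, here is a minimal Python sketch (standard library only) of how a read query against the Dataverse Web API is shaped. It only builds the URL and headers and sends nothing. The org URL is a placeholder, getting the AAD access token is assumed to happen elsewhere, and `build_query_url`/`build_headers` are hypothetical helpers; the `/api/data/v9.2/` path, the `accounts` entity set and the OData `$select`, `$filter` and `$top` options follow the standard Web API conventions.

```python
from __future__ import annotations

from urllib.parse import quote, urlencode

# Placeholder environment URL; substitute your own org's URL.
ORG_URL = "https://yourorg.crm.dynamics.com"
API_VERSION = "v9.2"


def build_query_url(entity_set: str, select: list[str],
                    filter_expr: str | None = None, top: int | None = None) -> str:
    """Build an OData v4 query URL for a Dataverse entity set."""
    params = {"$select": ",".join(select)}
    if filter_expr:
        params["$filter"] = filter_expr
    if top is not None:
        params["$top"] = str(top)
    # Keep OData punctuation readable; spaces become %20 rather than '+'.
    query = urlencode(params, safe="$,'()", quote_via=quote)
    return f"{ORG_URL}/api/data/{API_VERSION}/{entity_set}?{query}"


def build_headers(access_token: str) -> dict[str, str]:
    """Standard OData headers; the bearer token is assumed to come from AAD."""
    return {
        "Authorization": f"Bearer {access_token}",
        "Accept": "application/json",
        "OData-MaxVersion": "4.0",
        "OData-Version": "4.0",
    }


# Active accounts (statecode 0), name and account number only, first five rows.
url = build_query_url("accounts", ["name", "accountnumber"], "statecode eq 0", top=5)
print(url)
```

The JSON that comes back wraps the rows in a `value` array, so any HTTP client can send this request once you have a token.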

Dataverse basically gives you a PaaS data-hosting service that mimics what we have done for many years with databases and open APIs, adds advanced features and tooling, and is all securely managed for you.

The main con is cost. You need to know your size and keep buying add-ons to the plans, so scaling Dataverse is expensive.

Common Data Model: a collection of tables (previously called entities, and most CRM people still call them entities), e.g. account, email and customer, for a business to reuse. It originally comes from CRM, and the starting point consists of hundreds of pre-created entities. It is a common standard for holding data.

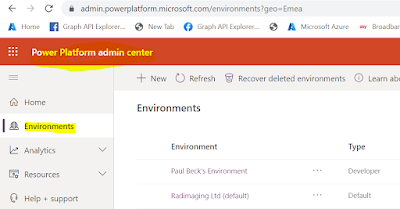

Each Power Platform environment can have a single Dataverse database associated with it. It's a good idea to have more than one environment but, at its simplest, use a trial to learn and then progress to production.

Once I have a new environment, I can use Power Apps to access my environment's Dataverse and model out a new table to store info; in this example I am storing people's tax returns.

Go into Dataverse and model the table directly.

Model the table in your Dataverse instance
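Once the tax return table exists, rows can be created through the Web API with a POST to the table's entity set. This is a minimal sketch, assuming a hypothetical publisher prefix `cr123`, hypothetical columns `cr123_name`, `cr123_taxyear` and `cr123_amountdue`, and a hypothetical lookup to Contact whose navigation property is `cr123_Contact`. Your real names depend on how you modelled the table. The `@odata.bind` annotation is the standard OData way to set a lookup to an existing row.

```python
from __future__ import annotations

import json
import uuid

# Hypothetical names: "cr123" stands in for your solution publisher's prefix,
# and the columns are whatever you created when modelling the table.
ENTITY_SET = "cr123_taxreturns"
CONTACT_LOOKUP = "cr123_Contact"  # navigation property of a lookup column to Contact


def build_create_request(contact_id: uuid.UUID, tax_year: int,
                         amount_due: float) -> tuple[str, str]:
    """Return the relative URL and JSON body for a POST that creates one tax return row."""
    body = {
        "cr123_name": f"Tax return {tax_year}",
        "cr123_taxyear": tax_year,
        "cr123_amountdue": amount_due,
        # @odata.bind sets the lookup by pointing at an existing contact row.
        f"{CONTACT_LOOKUP}@odata.bind": f"/contacts({contact_id})",
    }
    return f"/api/data/v9.2/{ENTITY_SET}", json.dumps(body)


path, payload = build_create_request(
    uuid.UUID("00000000-0000-0000-0000-000000000001"), 2021, 1250.50
)
print("POST", path)
print(payload)
```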

Dataverse Security in a Nutshell:

- A user is linked from AAD to the User entity in the Dataverse.

- User Entity record is aligned to the AAD User.

- AAD Users can be part of AAD security groups.

- Dataverse Teams (Dataverse Group Teams) can have Users and/or Security Groups assigned.

- Dataverse Group Teams are aligned to Business Units.

- Business Units have roles (rights).
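The chain above can be sketched as a small, hypothetical Python model. A user's effective roles are the ones assigned directly plus the roles of every team they belong to, either as a direct member or through an AAD security group linked to a group team. `Team` and `effective_roles` are illustrative names only, not part of any Dataverse SDK, and the sketch ignores team types and role inheritance details.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Team:
    """A hypothetical stand-in for a Dataverse team."""
    name: str
    business_unit: str  # the team belongs to one BU; its roles come from that BU
    roles: set[str]
    members: set[str] = field(default_factory=set)  # users added directly
    aad_groups: set[str] = field(default_factory=set)  # linked AAD security groups


def effective_roles(
    user_id: str,
    direct_roles: set[str],
    aad_membership: dict[str, set[str]],
    teams: list[Team],
) -> set[str]:
    """Roles assigned directly to the user plus those of every team they belong to."""
    user_groups = aad_membership.get(user_id, set())
    roles = set(direct_roles)
    for team in teams:
        # Membership counts if the user was added directly or via a linked AAD group.
        if user_id in team.members or team.aad_groups & user_groups:
            roles |= team.roles
    return roles


teams = [
    Team("Tax Advisors", "UK", {"Tax Return Editor"}, aad_groups={"sg-tax-advisors"}),
    Team("Auditors", "Audit", {"Read Only Auditor"}, members={"bob"}),
]
aad_membership = {"alice": {"sg-tax-advisors"}}

print(sorted(effective_roles("alice", {"Basic User"}, aad_membership, teams)))
# ['Basic User', 'Tax Return Editor']
```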

"Security is additive" in Dataverse (generally the whole MS and security world these days). i.e. no remove actions. If you have permission in any of the groups you can access the data/behavior.

Business Units are used to restrict access to data. They can be hierarchical, e.g. Enterprise > Audit > EMEA > UK (don't use them like this, keep it simple).
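The hierarchy matters because a role's access level is evaluated relative to the user's own business unit. The sketch below is a deliberately simplified, hypothetical model built on the example hierarchy. It ignores sharing and team ownership, and `can_read` is an illustrative helper, not a Dataverse API. It also hints at why deep hierarchies are hard to reason about: every extra level is another step in the walk.

```python
from __future__ import annotations

# Hypothetical hierarchy based on the example above: child BU -> parent BU
PARENT_BU = {"Audit": "Enterprise", "EMEA": "Audit", "UK": "EMEA"}


def is_same_or_descendant(bu: str, ancestor: str) -> bool:
    """True if bu is ancestor itself or sits somewhere below it."""
    current: str | None = bu
    while current is not None:
        if current == ancestor:
            return True
        current = PARENT_BU.get(current)
    return False


def can_read(depth: str, user: str, user_bu: str, owner: str, owner_bu: str) -> bool:
    """Simplified read check for one row; ignores sharing and team ownership."""
    if depth == "organization":
        return True
    if depth == "parent_child_business_units":
        return is_same_or_descendant(owner_bu, user_bu)
    if depth == "business_unit":
        return owner_bu == user_bu
    if depth == "user":
        return owner == user
    return False  # "none"


# An auditor in "Audit" reading a row owned by someone in "UK":
print(can_read("parent_child_business_units", "ann", "Audit", "raj", "UK"))  # True
print(can_read("business_unit", "ann", "Audit", "raj", "UK"))  # False
```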

Security Roles define a user's permissions across the Dataverse entities, e.g. a role can grant read-only access to the Accounts entity.

Teams consist of users and security groups, and teams get assigned roles. There are two types of Teams in Dataverse: Owner teams and Access teams.

Field-level security only allows specified users to see a field's data.
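As a rough mental model, field-level security works like a mask. Some columns are marked as secured, and a user only sees a secured column's value if one of their field security profiles grants read on it. The profiles combine additively, just like roles. In this hypothetical sketch, hidden values come back as `None`, loosely mirroring how Dataverse returns secured columns without their values. The column and profile names are made up for the tax return example.

```python
from __future__ import annotations

# Hypothetical: columns with field-level security enabled on the tax return table
SECURED_COLUMNS = {"cr123_taxnumber", "cr123_amountdue"}
# Hypothetical field security profiles: profile name -> secured columns it may read
PROFILES = {
    "Tax Officers": {"cr123_taxnumber", "cr123_amountdue"},
    "Call Centre": {"cr123_taxnumber"},
}


def visible_row(row: dict[str, object], user_profiles: set[str]) -> dict[str, object]:
    """Blank out secured columns the user has no profile granting read access to."""
    readable: set[str] = set()
    for profile in user_profiles:
        readable |= PROFILES.get(profile, set())  # profiles combine additively
    return {
        column: (value if (column not in SECURED_COLUMNS or column in readable) else None)
        for column, value in row.items()
    }


row = {"cr123_name": "2021 return", "cr123_taxnumber": "AB123456", "cr123_amountdue": 1250.5}
print(visible_row(row, {"Call Centre"}))
```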